class ChatMessages:

def __init__(self):

"""Initializes the Chat."""

self._messages = []

def _append_message(self, role, content):

"""Appends a message with specified role and content to messages list."""

self._messages.append({"role": role, "content": content})

def append_system_message(self, content):

"""Appends a system message with specified content to messages list."""

self._append_message("system", content)

def append_user_message(self, content):

"""Appends a user message with specified content to messages list."""

self._append_message("user", content)

def append_assistant_message(self, content):

"""Appends an assistant message with specified content to messages list."""

self._append_message("assistant", content)

def get_messages(self):

"""Returns a shallow copy of the messages list."""

return self._messages[:]Let’s build a light-weight chat for llama2 from scratch which can be reused in your Jupyter notebooks.

Working exploratively with large language models (LLMs), I wanted to not only send prompts to LLMs, but to chat with the LLM from within my Jupyter notebook. It turns out, that building a chat from scratch is not complicated. While I use a local llama2 model in this notebook, the concepts I describe are universal and also transfer to other implementations.

Before we get started: If you want to interactively run through this blog post, please check out the corresponding Jupyter notebook.

Building Chat Messages

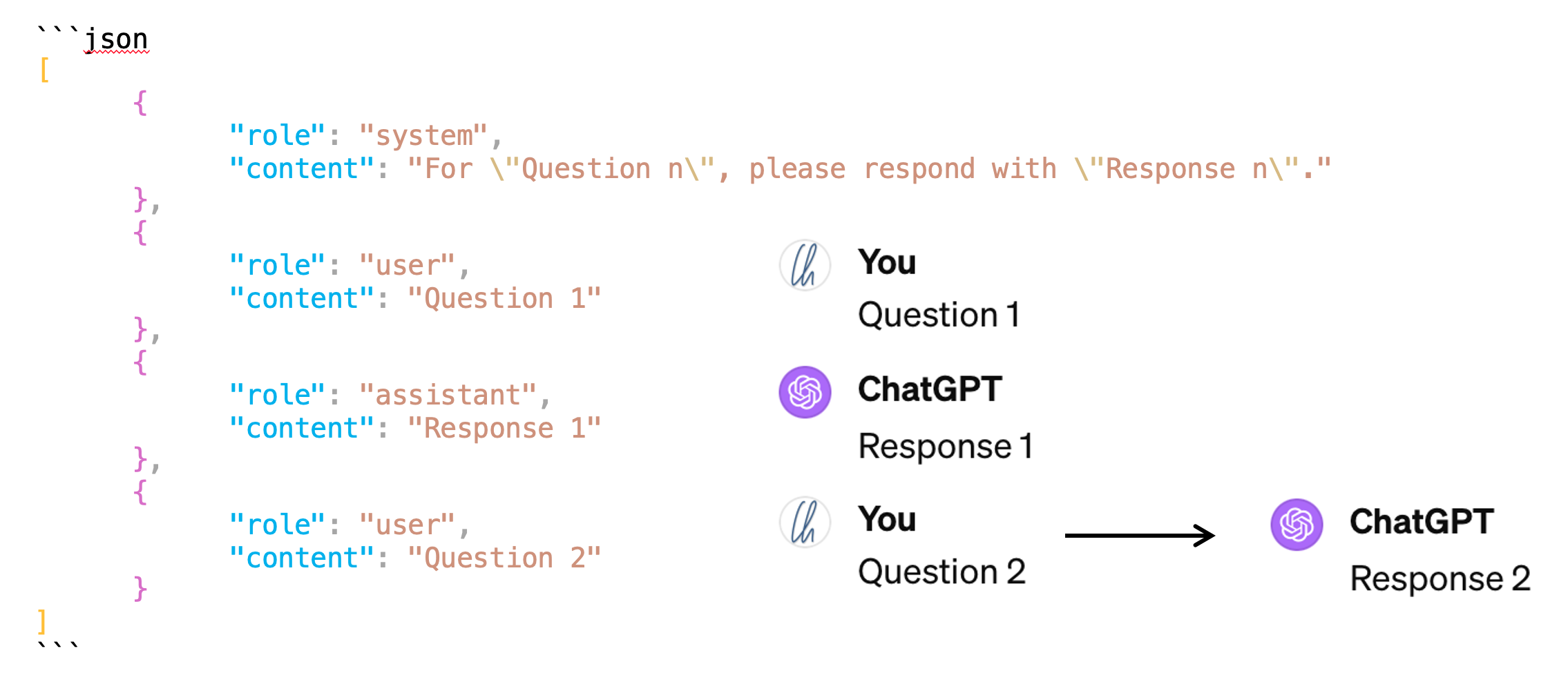

“Chat Messages” are the messages you exchange with the LLM. There are 3 roles:

- The

system-message gives overall instructions to the LLM which should be used during the whole chat. It only appears once. User-messages are the messages (“prompts”) you send to the LLM.- The LLM replies with

assistant-messages.

The chat messages need to be passed to the LLM via the API in a JSON format. Since the chat is stateless, you need to pass the whole previous conversation to be able to ask follow-up questions. The following animated GIF shows the structure in analogy to ChatGPT. Additionally, it animates how the messages build up over time during the chat.

Based on that, let’s build a class which contains and manages the chat messages:

The 3 methods append_system_message, append_user_message, and append_assistant_message call the private method _append_message, so it is always clear which message types can be added. The get_messages method returns a shallow copy of the chat messages: callers cannot add or remove entries in the private _messages list of ChatMessages, although the message dictionaries themselves are still shared.
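To illustrate what “shallow copy” means here, the following sketch uses a condensed copy of the class above (only the methods it needs) so that it runs on its own. Appending to the returned list leaves the internal list untouched, but editing one of the shared message dictionaries does change the stored chat.

```python
class ChatMessages:
    """Condensed copy of the ChatMessages class above (user messages only)."""

    def __init__(self):
        self._messages = []

    def _append_message(self, role, content):
        self._messages.append({"role": role, "content": content})

    def append_user_message(self, content):
        self._append_message("user", content)

    def get_messages(self):
        # Slicing copies the list, but not the dictionaries inside it
        return self._messages[:]


chat_messages = ChatMessages()
chat_messages.append_user_message("Question 1")

messages = chat_messages.get_messages()
messages.append({"role": "user", "content": "Injected"})  # only changes the copy
print(len(chat_messages.get_messages()))  # 1

messages[0]["content"] = "Edited"  # the dictionary object is shared
print(chat_messages.get_messages()[0]["content"])  # Edited
```

If full isolation were needed, get_messages could return `copy.deepcopy(self._messages)` instead, at the cost of copying the whole conversation on every call.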

Let’s quickly re-create the example shown in the image above:

chat_messages = ChatMessages()
chat_messages.append_system_message("For \"Question n\", please respond with \"Response n\"")
chat_messages.append_user_message("Question 1")
chat_messages.append_assistant_message("Response 1")
chat_messages.append_user_message("Question 2")
chat_messages.append_assistant_message("Response 2")

import json
from pygments import highlight
from pygments.lexers import JsonLexer
from pygments.formatters import TerminalFormatter

# Convert messages to a formatted JSON string
json_str = json.dumps(chat_messages.get_messages(), indent=4)

# Highlight the JSON string to add colors
print(highlight(json_str, JsonLexer(), TerminalFormatter()))

[
    {
        "role": "system",
        "content": "For \"Question n\", please respond with \"Response n\""
    },
    {
        "role": "user",
        "content": "Question 1"
    },
    {
        "role": "assistant",
        "content": "Response 1"
    },
    {
        "role": "user",
        "content": "Question 2"
    },
    {
        "role": "assistant",
        "content": "Response 2"
    }
]
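Because the model does not remember earlier requests, every call has to carry the whole conversation. The sketch below makes this visible with a hypothetical stand-in, `fake_llm` (not part of llama2 or llama_cpp), which, like a stateless API, only knows the messages passed to it and answers “Response n” for the n-th user message it sees.

```python
SYSTEM = 'For "Question n", please respond with "Response n"'


def fake_llm(messages):
    """Hypothetical stand-in for an LLM call: it only sees the messages passed in."""
    n = sum(1 for m in messages if m["role"] == "user")
    return f"Response {n}"


history = [{"role": "system", "content": SYSTEM}]
for question in ["Question 1", "Question 2"]:
    history.append({"role": "user", "content": question})
    reply = fake_llm(history)  # the whole history is sent on every call
    history.append({"role": "assistant", "content": reply})

print([m["content"] for m in history[1:]])
# ['Question 1', 'Response 1', 'Question 2', 'Response 2']

# Sending only the latest question loses the context of the earlier turn
print(fake_llm([{"role": "system", "content": SYSTEM},
                {"role": "user", "content": "Question 2"}]))  # Response 1
```

The last call shows why follow-up questions only work if the earlier turns are included in the request.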

Building Chat Version 1

In my previous blog post, we saw how to prompt the llama2 model by calling the create_chat_completion method.

The class Llama2ChatVersion2 simplifies prompting the LLM by doing the following:

- Upon initialization of the chat object, the chat messages are initialized and the system message is added.
- When prompting llama2, both the prompt (the user message) and the response (the assistant message) are added to the chat messages.
- The plain text returned from llama2 is displayed as Markdown with a larger font size for better readability.
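The three steps above can be sketched without loading a model. `FakeLlm` is a hypothetical stand-in for `llama_cpp.Llama`: it returns a dictionary with the same nesting that the class below reads the answer from (`['choices'][0]['message']['content']`), but omits every other field of a real response. The HTML wrapping mirrors `_format_markdown_with_style`; outside Jupyter the result is simply printed.

```python
class FakeLlm:
    """Hypothetical stand-in for llama_cpp.Llama; only the response nesting is mimicked."""

    def create_chat_completion(self, messages):
        last_prompt = messages[-1]["content"]
        return {"choices": [{"message": {"role": "assistant",
                                         "content": f"You asked: {last_prompt}"}}]}


def format_markdown_with_style(text, font_size=16):
    # Same wrapping as _format_markdown_with_style in the class below
    return f"<span style='font-size: {font_size}px;'>{text}</span>"


stand_in_llm = FakeLlm()

# Step 1: initialize the messages with the system message
messages = [{"role": "system", "content": "Answer in a very concise and accurate way"}]

# Step 2: add the user prompt, call the model, add the assistant response
messages.append({"role": "user", "content": "Name the planets in the solar system"})
response = stand_in_llm.create_chat_completion(messages)
answer = response["choices"][0]["message"]["content"]
messages.append({"role": "assistant", "content": answer})

# Step 3: format the answer (in Jupyter: display(Markdown(html)))
html = format_markdown_with_style(answer)
print(html)
# <span style='font-size: 16px;'>You asked: Name the planets in the solar system</span>
```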

from IPython.display import Markdown, clear_output, display

class Llama2ChatVersion2:

    def __init__(self, llm, system_message):
        """Initializes the Chat with the system message."""
        self._llm = llm
        self._chat_messages = ChatMessages()
        self._chat_messages.append_system_message(system_message)

    def _get_llama2_response(self):
        """Returns Llama2 model response for given messages."""
        self.model_response = self._llm.create_chat_completion(self._chat_messages.get_messages())
        return self.model_response['choices'][0]['message']['content']

    def _format_markdown_with_style(self, text, font_size=16):
        """Wraps text in a <span> with specified font size, defaults to 16."""
        return f"<span style='font-size: {font_size}px;'>{text}</span>"

    def prompt_llama2(self, user_prompt):
        """Processes user prompt, displays Llama2 response formatted in Markdown."""
        self._chat_messages.append_user_message(user_prompt)
        llama2_response = self._get_llama2_response()
        self._chat_messages.append_assistant_message(llama2_response)
        display(Markdown(self._format_markdown_with_style(llama2_response)))

Let’s create a llama2 instance and prompt it:

from llama_cpp import Llama

# Adjust model_path to the location of your GGUF model file
#llm = Llama(model_path="../models/Llama-2-7b-chat/llama-2-7b-chat.Q4_K_M.gguf", n_ctx=2048, verbose=False)
llm = Llama(model_path="../../../lm-hackers/models/llama-2-7b-chat.Q4_K_M.gguf", n_ctx=2048, verbose=False)

chat = Llama2ChatVersion2(llm, "Answer in a very concise and accurate way")
chat.prompt_llama2("Name the planets in the solar system")

Sure! Here are the names of the planets in our solar system, listed in order from closest to farthest from the Sun:

- Mercury
- Venus
- Earth
- Mars
- Jupiter
- Saturn
- Uranus
- Neptune

The result looks good, but from a usability perspective it is not great, because you have to wait for the whole response to be completed before you see any output. On my machine, this takes a few seconds even for this short reply. Especially for longer answers, you might start to wonder whether the process is still running correctly, or maybe that is just my impatience.

In any case, it would be nice to see the model’s response streamed, i.e. to see the model write the answer word by word (token by token), the same way you are used to seeing ChatGPT print out its answers.

How Streaming Works

The following cell contains an illustrative example of how streaming works: because the function mock_llm_stream() yields its results instead of returning them, calling it returns a generator object. This means that the function body is not executed when the function is called; the generator only produces values lazily when it is iterated over. The for loop iterates over the generator object, and each iteration returns a word after some latency, simulating the token generation by the LLM.
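The laziness described above can be observed in isolation. In this small sketch (all names are illustrative), the generator records in a list when its body actually runs, so you can see that calling the function executes nothing and each `next()` call runs the body only up to the next `yield`:

```python
import inspect

events = []


def lazy_words():
    events.append("body started")  # runs only when iteration begins
    for word in ["one", "two", "three"]:
        events.append(f"yielding {word}")
        yield word


gen = lazy_words()               # calling the function only creates a generator object
print(inspect.isgenerator(gen))  # True
print(events)                    # [] : no code in the body has run yet
print(next(gen))                 # one
print(events)                    # ['body started', 'yielding one']
print(list(gen))                 # ['two', 'three'], the remaining values
```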

import time

def fetch_next_word(words, current_index):
    """
    Mock function to simulate making an API call to fetch the next word from the LLM.
    """
    # Simulate network delay or processing time
    time.sleep(0.5)
    if current_index < len(words):
        return words[current_index]
    else:
        raise StopIteration("End of sentence reached.")

def mock_llm_stream():
    sentence = "This is an example for a text streamed via a generator object."
    words = sentence.split()
    current_index = 0
    while True:
        try:
            # Simulate fetching the next word from the LLM
            word = fetch_next_word(words, current_index)
            yield word
            current_index += 1
        except StopIteration:
            break

# Capture the generator function in a variable
mock_llm_response = mock_llm_stream()

# Example of how to use this mock_llm_response
for word in mock_llm_response:
    print(word, end=" ")

This is an example for a text streamed via a generator object.

So let’s stream a response from llama2:

messages = [
    {"role": "system", "content": "Answer in a very concise and accurate way"},
    {"role": "user", "content": "Name the planets in the solar system"},
]

model_response = llm.create_chat_completion(messages=messages, stream=True)

complete_response = ""
for part in model_response:
    # Check if 'content' key exists in the 'delta' dictionary
    if 'content' in part['choices'][0]['delta']:
        content = part['choices'][0]['delta']['content']
        print(content, end='')
        complete_response += content
    else:
        # Handle the case where 'content' key is not present
        # (typically the first chunk, which only carries the role, and the final, empty chunk)
        # For example, you can print a placeholder or do nothing
        # print("(no content)", end='')
        pass

 Sure! Here are the names of the planets in our solar system, listed in order from closest to farthest from the Sun:

1. Mercury
2. Venus
3. Earth
4. Mars
5. Jupiter
6. Saturn
7. Uranus
8. Neptune

Chat Version 2 - Streaming included

Let’s add the streaming functionality to our chat class. For this, we are going to use a nice trick from fastcore to add the 2 new methods: we can @patch them into the existing class:

from fastcore.utils import patch  # @patch adds a function as a method to the class given in the type annotation of `self`

@patch
def _get_llama2_response_stream(self: Llama2ChatVersion2):
    """Returns generator object for streaming Llama2 model responses for given messages."""
    return self._llm.create_chat_completion(self._chat_messages.get_messages(), stream=True)

@patch
def prompt_llama2_stream(self: Llama2ChatVersion2, user_prompt):
    """Processes user prompt in streaming mode, displays updates in Markdown."""
    self._chat_messages.append_user_message(user_prompt)
    llama2_response_stream = self._get_llama2_response_stream()

    complete_stream = ""
    for part in llama2_response_stream:
        # Check if 'content' key exists in the 'delta' dictionary
        if 'content' in part['choices'][0]['delta']:
            stream_content = part['choices'][0]['delta']['content']
            complete_stream += stream_content
            # Clear previous output and display new content
            clear_output(wait=True)
            display(Markdown(self._format_markdown_with_style(complete_stream)))
        else:
            # Handle the case where 'content' key is not present
            pass

    self._chat_messages.append_assistant_message(complete_stream)

Now we can use the method prompt_llama2_stream to get a more interactive response:

chat = Llama2ChatVersion2(llm, "Answer in a very concise and accurate way")
chat.prompt_llama2_stream("Name the planets in the solar system")

Sure! Here are the names of the planets in our solar system, listed in order from closest to farthest from the Sun:

- Mercury
- Venus
- Earth
- Mars
- Jupiter
- Saturn
- Uranus
- Neptune

Just for the fun of it, let’s continue the chat:

chat.prompt_llama2_stream("Please reverse the list")

Of course! Here are the names of the planets in our solar system in reverse order, from farthest to closest to the Sun:

1. Neptune
2. Uranus
3. Saturn
4. Jupiter
5. Mars
6. Earth
7. Venus
8. Mercury

chat.prompt_llama2_stream("Please sort the list by the mass of the planet")

Sure! Here are the names of the planets in our solar system sorted by their mass, from lowest to highest:

1. Mercury (0.38 Earth masses)
2. Mars (0.11 Earth masses)
3. Venus (0.81 Earth masses)
4. Earth (1.00 Earth masses)
5. Jupiter (29.6 Earth masses)
6. Saturn (95.1 Earth masses)
7. Uranus (14.5 Earth masses)
8. Neptune (17.1 Earth masses)

Looping back to the beginning, you can see how the whole chat is represented in the chat messages:

chat._chat_messages.get_messages()

[{'role': 'system', 'content': 'Answer in a very concise and accurate way'},
 {'role': 'user', 'content': 'Name the planets in the solar system'},
 {'role': 'assistant',
  'content': ' Sure! Here are the names of the planets in our solar system, listed in order from closest to farthest from the Sun:\n\n1. Mercury\n2. Venus\n3. Earth\n4. Mars\n5. Jupiter\n6. Saturn\n7. Uranus\n8. Neptune'},
 {'role': 'user', 'content': 'Please reverse the list'},
 {'role': 'assistant',
  'content': ' Of course! Here are the names of the planets in our solar system in reverse order, from farthest to closest to the Sun:\n1. Neptune\n2. Uranus\n3. Saturn\n4. Jupiter\n5. Mars\n6. Earth\n7. Venus\n8. Mercury'},
 {'role': 'user', 'content': 'Please sort the list by the mass of the planet'},
 {'role': 'assistant',
  'content': ' Sure! Here are the names of the planets in our solar system sorted by their mass, from lowest to highest:\n1. Mercury (0.38 Earth masses)\n2. Mars (0.11 Earth masses)\n3. Venus (0.81 Earth masses)\n4. Earth (1.00 Earth masses)\n5. Jupiter (29.6 Earth masses)\n6. Saturn (95.1 Earth masses)\n7. Uranus (14.5 Earth masses)\n8. Neptune (17.1 Earth masses)'}]

Conclusion

In this blog post, we implemented an LLM chat from scratch in a very lightweight format. We learned how the chat messages need to be handled to create the chat experience, and we even added streaming support.

And the best thing: we can reuse this chat functionality in other notebooks without rewriting or copy-pasting it, keeping our notebooks DRY and clean. I have moved the core code of this notebook to a separate .py file, and this notebook demonstrates how to reuse the chat in another notebook. 😀