After Cat vs. Dog, this is my next challenge in computer vision: building on chapter 4 of the book, I challenged myself to implement a model based on the MNIST dataset, as recommended under further research.

This challenge is also available as a Kaggle competition, and I found that this bit of competitive spirit added some spice to the project. It also broadened the range of implementation topics: training a model is one thing, but using it for meaningful predictions is equally important and also required some effort. Last but not least, submitting the results was a nice way to check whether they are actually correct. As predicted: “This was a significant project and took you quite a bit of time to complete! I needed to do some of my own research to figure out how to overcome some obstacles on the way”.

I took an iterative approach, similar to the path I followed for the Titanic-Challenge:

- First, I implemented a Fast.AI version without making a submission, to get a feel for the data and to get a result quickly.

- Afterwards, I made my first submission. This proved to be a challenge, mainly because of the data format (more below), which I had to sort out before I could submit.

- I was not 100% satisfied with my first submission, because the data handling was not very elegant, so I re-implemented it.

- The above steps set me up for implementing a from-scratch version analogous to chapter 4 (where the book “only” builds a model for distinguishing 3s and 7s).

- Update Nov 28: Just for aesthetics, I replaced the cover image of this blog post. A collection of MNIST digits is nothing revolutionary, but coding the image in this notebook was a nice exercise.

It was a challenging project, and I learned a lot on the way. Below are some key points and learnings.

The Fast.AI version without a submission

By now, this is pretty straightforward for me. I just copied and pasted a few lines of code to do the training, and I was able to create a decent model very quickly in this notebook.

The catch with this version, however, is that it is not ready for bulk inference: the competition requires 28,000 predictions, something I addressed in my second iteration.

Additionally, I found it interesting that the MNIST dataset was already pushing the limits of my laptop: the training time of about 40 minutes was OK, but it becomes quite a burden if training has to be repeated several times. Moving the training to Paperspace, on a free GPU, was 10x faster (no surprise). Since I still like to have everything locally, it is quite convenient to move files back and forth via git, including the .pkl-files. This way, training can be done on the GPU and inference can be done locally. Interestingly, local performance was not an issue in any of my other notebooks (but I expect that to change in future projects).

Resubmitting: Working with the csv-files

I found it not very elegant to just convert the csv-files to png-images. That seems convenient, but it is a bit wasteful. Therefore, I re-implemented the process in this notebook.

It was surprisingly difficult to convert the data into the right format in memory so that the learner would accept the image. But finally I was able to convert a PIL.Image.Image to fastai.vision.core.PILImage. As usual with these things, once it is done, it looks easy.

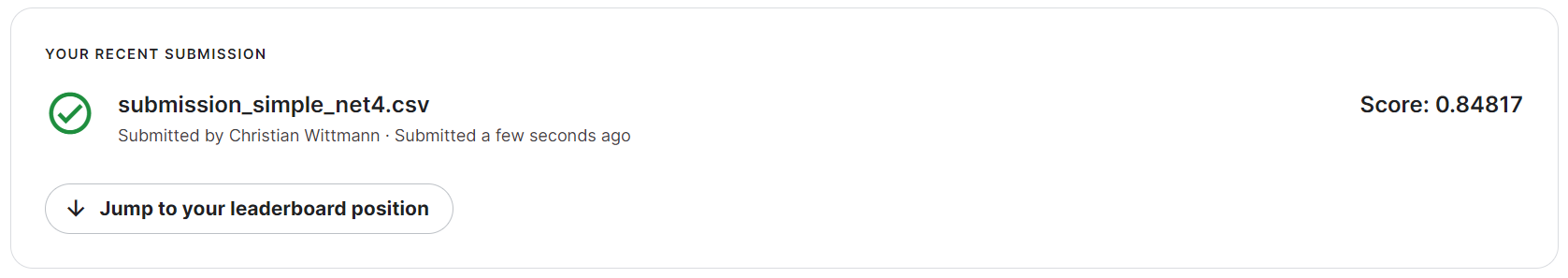

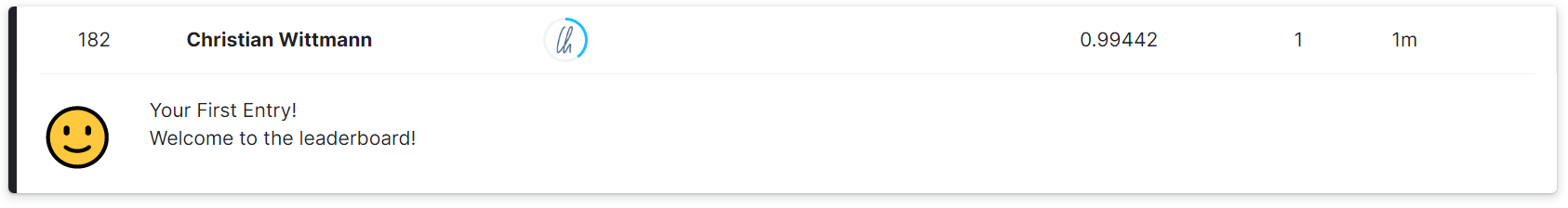
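To make the in-memory route a bit more concrete, here is a minimal sketch of the first half of the conversion: turning one CSV row into a 28x28 `uint8` array with NumPy. It assumes the Kaggle layout (a `label` column followed by `pixel0` to `pixel783` in the training file, pixels only in the test file). The helper name `row_to_array` and the tiny synthetic CSV are made up for this example, so treat it as an illustration rather than the exact code from the notebook.

```python
import csv
import io

import numpy as np

IMAGE_SIDE = 28  # MNIST digits are 28x28 grayscale images


def row_to_array(row, has_label):
    """Convert one CSV row (a list of strings) into (label, 28x28 uint8 array).

    `label` is None for rows without a label column (test data).
    Hypothetical helper, named only for this sketch.
    """
    label = int(row[0]) if has_label else None
    pixels = row[1:] if has_label else row
    expected = IMAGE_SIDE * IMAGE_SIDE
    if len(pixels) != expected:
        raise ValueError(f"expected {expected} pixels, got {len(pixels)}")
    values = [int(p) for p in pixels]  # pixel intensities in 0..255
    image = np.array(values, dtype=np.uint8).reshape(IMAGE_SIDE, IMAGE_SIDE)
    return label, image


# Tiny synthetic stand-in for the training file: one header line, one "digit"
header = ["label"] + [f"pixel{i}" for i in range(IMAGE_SIDE * IMAGE_SIDE)]
fake_pixels = [str(i % 256) for i in range(IMAGE_SIDE * IMAGE_SIDE)]
fake_csv = io.StringIO(
    ",".join(header) + "\n" + ",".join(["7"] + fake_pixels) + "\n"
)

reader = csv.reader(fake_csv)
next(reader)  # skip the header line
label, image = row_to_array(next(reader), has_label=True)
print(label, image.shape, image.dtype)
```

From an array like this, `PIL.Image.fromarray(image)` gives a grayscale PIL image, and as far as I can tell fastai's `PILImage.create` also accepts such an array directly; I would check that against the fastai version in use.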

Not surprisingly, the submission score was the same, which was a nice way to verify the result.

My first submission

When I first downloaded the Kaggle data, I was quite surprised to see that the download did not contain any image files, just two large csv-files. Since I only knew how to handle images, I simply converted the data to png-images in this notebook.

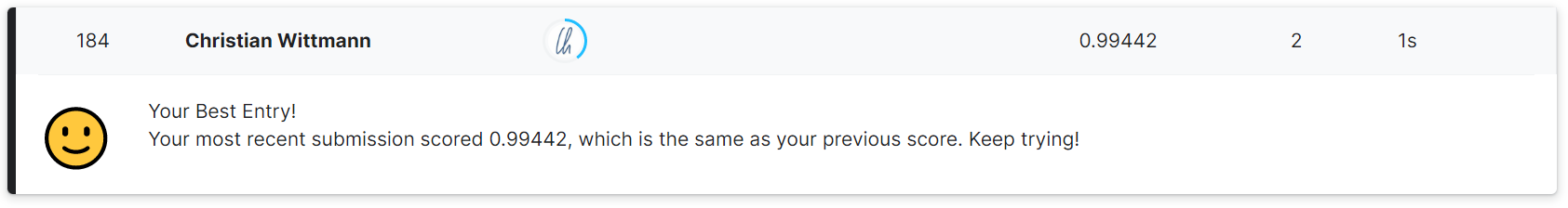

Once that was done, I could use the model trained in my first notebook to make my first submission. In this notebook, I ran the converted images through the model and collected the predictions. I found the result of 99.4% quite impressive.
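For reference, the last step (turning collected predictions into a submission file) can be sketched as follows. The competition expects a CSV with the header `ImageId,Label`, where `ImageId` counts the test images starting at 1. The function name `write_submission` and the three example predictions are invented for this sketch; it is not the code from my notebook.

```python
import csv
import io


def write_submission(predictions, out_file):
    """Write predicted digits as a Kaggle-style submission CSV.

    `predictions` is a sequence of ints in test-set order.
    Hypothetical helper, named only for this sketch.
    """
    writer = csv.writer(out_file, lineterminator="\n")
    writer.writerow(["ImageId", "Label"])
    # ImageId is 1-based and follows the row order of the test file
    for image_id, digit in enumerate(predictions, start=1):
        writer.writerow([image_id, int(digit)])


# Example with three made-up predictions; a real run would pass 28,000 of them
buffer = io.StringIO()
write_submission([2, 0, 9], buffer)
print(buffer.getvalue())
```

To write a real file instead of an in-memory buffer, the same function can be called with `open("submission.csv", "w", newline="")`.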

The from-scratch version

Doing it all from scratch was an interesting learning exercise, because I thought I already had a good understanding of what needed to be done before implementing it. But, as it turns out, there is a tremendous difference between thinking that you have understood something and actually implementing it. There is a lot of fine print, and you have to pay attention to the details: formatting the data, getting it into correctly shaped tensors, and implementing the gradient descent. Whatever I had learned and understood before, implementing the MNIST challenge has greatly deepened and solidified it.
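To illustrate the kind of detail involved, here is a minimal sketch of a ten-class linear model trained with plain gradient descent. It is deliberately not the code from my notebook: it uses NumPy with hand-derived gradients instead of PyTorch tensors and autograd, random synthetic data instead of MNIST, and softmax with cross-entropy as one plausible choice of loss. All names and hyperparameters are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for MNIST: 200 "images" of 784 values, 10 classes.
# Each class gets its own random prototype so the problem is learnable.
n_samples, n_pixels, n_classes = 200, 28 * 28, 10
prototypes = rng.standard_normal((n_classes, n_pixels))
labels = rng.integers(0, n_classes, size=n_samples)
images = prototypes[labels] + 0.1 * rng.standard_normal((n_samples, n_pixels))


def softmax(logits):
    # Subtract the row-wise max for numerical stability
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(probs, targets):
    # Mean negative log-probability assigned to the correct class
    return -np.log(probs[np.arange(len(targets)), targets] + 1e-12).mean()


# Linear model: one weight column and one bias per digit
weights = 0.01 * rng.standard_normal((n_pixels, n_classes))
bias = np.zeros(n_classes)
learning_rate = 0.01

losses = []
for epoch in range(50):
    probs = softmax(images @ weights + bias)  # shape (n_samples, n_classes)
    losses.append(cross_entropy(probs, labels))

    # Gradient of the mean cross-entropy w.r.t. the logits:
    # (probs - one_hot(labels)) / n_samples
    grad_logits = probs.copy()
    grad_logits[np.arange(n_samples), labels] -= 1.0
    grad_logits /= n_samples

    # Chain rule back to the parameters, then one gradient descent step
    weights -= learning_rate * (images.T @ grad_logits)
    bias -= learning_rate * grad_logits.sum(axis=0)

predicted = softmax(images @ weights + bias).argmax(axis=1)
accuracy = (predicted == labels).mean()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}, accuracy: {accuracy:.2f}")
```

Most of the fine print lives in the shapes: the input is `(n_samples, 784)`, the weights are `(784, 10)`, and the gradient of the weights must come out with exactly the same shape as the weights themselves.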

Some minor mysteries remain, which I also documented in the notebook. If you can guide me on how to fix them, please let me know.

The final result of my from-scratch version is not up to the first implementation with resnet18, but I am proud of it for other reasons ;).